Social media platforms have become battlegrounds for influence, information warfare, and censorship. Among the most sophisticated actors exploiting these platforms are Russian-linked bot networks, which use targeted campaigns and automated reporting tools to silence critics, manipulate narratives, and trigger account suspensions. I know because my Instagram account is a frequent target of theirs.

How Russian Bot Networks Operate

The world is ending! You may never recover from this! And all your kids will be born with red hair!

Your first indication that your Instagram account has been banned is an email like this:

Oh sure, eventually you’ll get an email like this:

But the overly alarming language really makes it seem like a phishing attack, and even if it isn’t, it conditions users to ignore messages like this. And all because social media networks like Instagram are so easily tricked by automated Russian bots.

Bots and butts

Russian bot networks consist of both automated accounts (bots) and human operators (“trolls”, aka “butts”). Bots are programmed to post, like, share, comment, and, crucially, mass-report content. Trolls coordinate campaigns, create narratives, and direct bot activity. Recent advances in AI allow these networks to create convincing fake profiles, making detection and removal more challenging. Of course, many of these operations are linked to Russian state entities, such as the Internet Research Agency (IRA), Federal Security Service (FSB), and General Staff Main Intelligence Directorate (GRU).

Targeted Campaigns and Automated Reporting Tools

Who are the targets?

Targeted campaigns are coordinated efforts to suppress, discredit, or remove specific voices from social media. The targets are often journalists and activists critical of Russian authorities, independent media outlets, and accounts discussing sensitive topics like cybersecurity, human rights, or Russian political opposition.

How Automated Reporting Tools Work

Mass-reporting scripts allow a single operator to deploy thousands of bots to file complaints against a specific account or post in a very short time. The bots mimic real users, making it appear as if a large community is flagging the content. The campaigns are often organized in private groups on platforms like Telegram or Discord, where participants share targets and synchronize attacks. The effectiveness of these tools relies on overwhelming the platform’s moderation system with sheer volume, not on the legitimacy of the complaints.
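To make the "volume, not legitimacy" point concrete, here is a minimal defensive sketch of how a moderation pipeline could weight reports by reporter track record instead of simply counting them. Everything in it is an assumption for illustration: the `Report` fields, the `reporter_weight` formula, and the sample numbers are hypothetical and do not describe how Instagram or any other platform actually scores reports.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Report:
    """One abuse report. All fields are hypothetical signals, not a real platform schema."""
    reporter_id: str
    account_age_days: int
    prior_reports_upheld: int
    prior_reports_total: int


def reporter_weight(report: Report) -> float:
    """Return a weight in [0, 1]; young accounts with no track record count for little."""
    # Accounts reach full age credit after one year (an arbitrary assumption).
    age_factor = min(report.account_age_days / 365.0, 1.0)
    # Laplace-smoothed share of this reporter's past reports that were upheld.
    accuracy = (report.prior_reports_upheld + 1) / (report.prior_reports_total + 2)
    return age_factor * accuracy


def raw_count(reports: list[Report]) -> int:
    """Naive signal: number of distinct reporters, which a bot swarm can inflate."""
    return len({r.reporter_id for r in reports})


def weighted_score(reports: list[Report]) -> float:
    """Weighted signal: each distinct reporter contributes its credibility weight once."""
    unique = {r.reporter_id: r for r in reports}
    return sum(reporter_weight(r) for r in unique.values())


# A swarm of two-day-old accounts versus a handful of long-standing, accurate reporters.
bots = [Report(f"bot{i}", 2, 0, 0) for i in range(1000)]
veterans = [Report(f"user{i}", 1500, 9, 10) for i in range(5)]

print("raw count:", raw_count(bots), "vs", raw_count(veterans))
print("weighted: ", round(weighted_score(bots), 2), "vs", round(weighted_score(veterans), 2))
```

With these made-up numbers, the swarm wins overwhelmingly on raw count, but five credible reporters outweigh a thousand throwaway accounts once each report is weighted. A real system would need far richer signals, since the AI-generated profiles described above are built to look established.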

Why Is Mass Reporting Effective?

Platforms like Instagram rely heavily on automated systems to process millions of reports daily. A sudden surge in complaints can trigger account suspension or content removal before a human moderator intervenes. Due to the volume of coordinated false reports, even content that does not violate guidelines can be taken down. And it seems as if the social media platform’s AI isn’t yet advanced enough to identify when an account is a frequent target of Russkis.
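One way a platform could blunt this is to treat a sudden surge of reports as a reason to pause automated enforcement rather than as evidence of a violation. The sliding-window detector below is a rough sketch of that idea under stated assumptions: the `ReportSurgeDetector` class, its thresholds, and the `"hold_for_human_review"` outcome are all hypothetical, and it assumes report timestamps arrive in non-decreasing order.

```python
from collections import deque


class ReportSurgeDetector:
    """Flags an account when too many reports arrive inside a short time window.

    Hypothetical sketch: one detector instance per reported account.
    Timestamps are seconds and must be passed in non-decreasing order.
    """

    def __init__(self, window_seconds: float, surge_threshold: int) -> None:
        self.window_seconds = window_seconds
        self.surge_threshold = surge_threshold
        self._timestamps: deque[float] = deque()

    def record(self, timestamp: float) -> str:
        """Record one report and return "normal" or "hold_for_human_review"."""
        self._timestamps.append(timestamp)
        # Drop reports that have fallen out of the sliding window.
        cutoff = timestamp - self.window_seconds
        while self._timestamps and self._timestamps[0] <= cutoff:
            self._timestamps.popleft()
        if len(self._timestamps) >= self.surge_threshold:
            # A burst looks like coordination, so route to a person instead of auto-suspending.
            return "hold_for_human_review"
        return "normal"


detector = ReportSurgeDetector(window_seconds=60, surge_threshold=5)

# Occasional reports spread over several minutes stay under the threshold.
organic = [detector.record(t) for t in (0, 100, 200, 300)]

# Five reports within five seconds trip the detector on the fifth one.
burst = [detector.record(t) for t in (400, 401, 402, 403, 404)]

print(organic)
print(burst)
```

The design choice here is that a surge changes who decides, not what is decided: the burst never suspends the account by itself, it only escalates the case to human review.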

Examples of Russian-Linked Bot Services

Meliorator

Meliorator is a covert, artificial intelligence (AI)-enhanced software package developed and used by Russian state-sponsored actors—particularly affiliates of RT (formerly Russia Today)—to conduct large-scale disinformation and influence operations on social media platforms. It has been central to recent Russian bot farm activities, targeting audiences in the United States, Europe, and beyond since at least 2022.

Key Features and Capabilities

Meliorator can generate thousands of realistic, AI-driven social media accounts (“souls”), each with unique biographical data, interests, and political leanings. These bots can post, like, follow, comment, and repost, mimicking genuine user behavior to evade detection. The tool can mirror and amplify narratives from other bot personas, perpetuating false or misleading information and reinforcing Kremlin-approved messaging.

Operators can script “thoughts”—automated actions or posting scenarios—to coordinate bot activity for maximum impact. Meliorator even includes methods to mask IP addresses, bypass two-factor authentication, and modify browser user agent strings, making bots harder to trace and block.

Operational Structure

| Component | Function |
|---|---|
| Brigadir | Administrator panel and graphical user interface for managing bots and their activities |
| Taras | Back-end seeding tool using .json files to control bot personas and automate scenarios |
| Souls | AI-generated fake identities forming the basis of each bot |
| Thoughts | Automated actions/scenarios executed by bots (e.g., posting, sharing, following) |

Russian-Language Botting Services

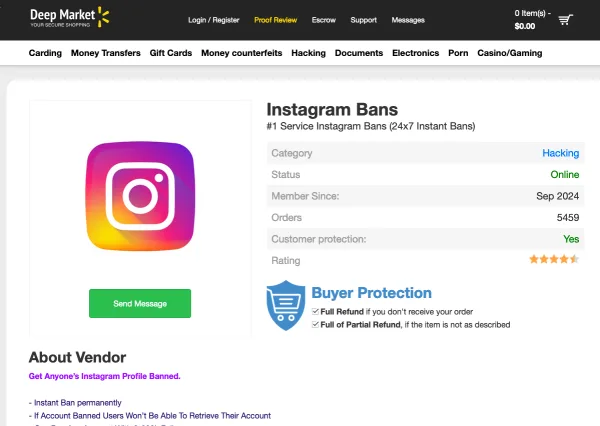

Pretty much anyone can get an Instagram account banned. Numerous underground forums and marketplaces offer services for mass reporting, spamming, and account manipulation, often advertising their ability to bypass platform defenses and guarantee account suspensions. The screenshot above is an advert for an Instagram Bans tool on the Deep Market onion website.

Why Companies Like Instagram Struggle to Stop This

The sheer scale of content and user activity forces platforms to use automated moderation, which is easily overwhelmed by coordinated mass reporting attacks. Advanced bots and AI-generated profiles are increasingly difficult to distinguish from real users, complicating detection and enforcement. And unfortunately, investigating each report manually is unfeasible at scale, leading to reliance on algorithms that can be manipulated by attackers.

Notable IP Addresses Linked to Russian Bot Operations

If you’re curious, interested, or just bored, here’s a list of IP addresses reportedly linked to Russian bot networks. Firewall them off (inbound) or work through a cloud proxy if you probe them, because they are notorious for answering probes with DDoS attacks.